Classification with O-PLS-DA

Partial least squares (PLS) is a versatile algorithm that can be used to predict either continuous or discrete/categorical variables. Classification with PLS is termed PLS-DA, where DA stands for discriminant analysis. PLS-DA has many favorable properties for multivariate data; two of the most important are how it handles collinear variables and its ability to rank variables by their predictive capacity within a multivariate context. O-PLS-DA (orthogonal projections to latent structures DA, closely related to orthogonal signal correction) is an extension of PLS-DA that concentrates the between-group variation in a single dimension, the first latent variable (LV), and separates the within-group variation (orthogonal to the classification goal) into orthogonal LVs. The variable loadings and/or coefficient weights from a validated O-PLS-DA model can be used to rank all variables by how well they discriminate between groups. This ranking can be used as part of a dimensionality reduction or feature selection task that seeks to identify the top predictors for a given model.
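To make the split between predictive and orthogonal variation concrete, here is a minimal NumPy sketch of single-response O-PLS in the style of Trygg and Wold's NIPALS-based algorithm, applied to a two-class problem with classes coded 0/1. It assumes mean-centering only, one response column, and a fixed number of orthogonal components; it is not the R code used in this tutorial, and the function name `opls_da` and its dictionary keys are hypothetical.

```python
import numpy as np


def opls_da(X, y, n_orth=1):
    """Minimal single-response O-PLS-DA sketch (one predictive LV, n_orth orthogonal LVs).

    X: (n_samples, n_features); y: (n_samples,) class codes, e.g. 0/1.
    Assumes mean-centering only; autoscaling X first is also common.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X = X - X.mean(axis=0)
    y = y - y.mean()

    # Predictive weight: the direction in X with maximal covariance with y.
    w = X.T @ y
    w = w / np.linalg.norm(w)

    orth_scores = []
    for _ in range(n_orth):
        t = X @ w                          # current predictive scores
        p = X.T @ t / (t @ t)              # loadings for those scores
        # Remove the part of p lying along w; what remains is variation unrelated to y.
        w_o = p - (w @ p) * w
        w_o = w_o / np.linalg.norm(w_o)
        t_o = X @ w_o                      # orthogonal (within-group) scores
        p_o = X.T @ t_o / (t_o @ t_o)      # orthogonal loadings
        X = X - np.outer(t_o, p_o)         # filter the orthogonal variation out of X
        orth_scores.append(t_o)
        # t_o is orthogonal to y, so X.T @ y is unchanged by the filtering and w is reused.

    # Predictive LV fitted on the filtered X.
    t = X @ w
    p = X.T @ t / (t @ t)
    c = (y @ t) / (t @ t)                  # regression of centered y on the predictive scores
    coef = c * w                           # per-variable coefficients (for the filtered X)
    t_orth = np.column_stack(orth_scores) if orth_scores else np.empty((X.shape[0], 0))
    return {"w": w, "t": t, "p": p, "coef": coef, "t_orth": t_orth}
```

Ranking variables by `np.abs(result["coef"])` (or by the predictive loadings `p`) gives the kind of variable ranking described above; in practice that ranking is only meaningful once the model has been validated.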

As with most predictive modeling or forecasting tasks, model validation is a critical requirement. Otherwise, the resulting models may be overfit or perform no better than a coin flip. Model validation is the process of estimating the model's performance, and thus ensuring that the model's internal variable rankings are actually informative.

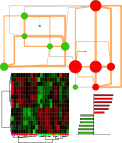

Below is a demonstration of the development and validation of an O-PLS-DA multivariate classification model for the famous Iris data set.

O-PLS-DA model validation tutorial

- Data pretreatment and preparation

- Model optimization

- Permutation testing

- Internal cross-validation

- External cross-validation

The Iris data set contains only 4 variables, but its sample sizes are favorable for demonstrating a two-tiered training and testing scheme (internal and external cross-validation). However, O-PLS really shines when building models with many correlated variables (coming soon).
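The two-tiered scheme can be sketched in plain Python: first hold out an external test set, stratified by class so that each Iris species (50 samples each) is represented, then run internal k-fold cross-validation on the training portion only, so the test set never influences model optimization. The function names, the one-third test fraction, and k = 5 are illustrative assumptions, not the settings used in this tutorial.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, test_frac=1 / 3, seed=0):
    """External split: hold out roughly test_frac of each class as a test set."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, lab in enumerate(labels):
        by_class[lab].append(i)
    train, test = [], []
    for idx in by_class.values():
        rng.shuffle(idx)
        n_test = round(len(idx) * test_frac)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)


def kfold(indices, k=5, seed=0):
    """Internal cross-validation: yield (fit, held_out) folds built only from `indices`."""
    idx = list(indices)
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        fit = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield fit, folds[i]


labels = ["setosa"] * 50 + ["versicolor"] * 50 + ["virginica"] * 50
train, test = stratified_holdout(labels)
for fit, held in kfold(train, k=5):
    pass  # fit/tune the model on `fit`, score it on `held`; touch `test` only at the end
```

Repeating the external split with different seeds gives a distribution of test-set performance rather than a single number.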

In this model, should variables be Gaussian? I have variables that are not Gaussian even after transformations. Thanks.

November 28, 2014 at 3:22 pm

There is no assumption of normality in PLS. However, a lack of normality will be evident in the distribution of the model's sample scores.

November 28, 2014 at 4:09 pm

In the permutation testing, the code OSC.validate.model(model=mods,perm=permuted.stats) returns an error. Could you please fix this?

Also, for the internal cross-validation, could you please elaborate on why that particular result suggests a strong model, and what the p-value measures? Thx

April 8, 2015 at 5:48 pm

Hi,

I just posted an updated O-PLS modeling example at https://imdevsoftware.wordpress.com/2015/04/09/o-pls-example-and-trial-server/.

The default model p-value is the permutation p-value: the penalized ratio of the number of times the permuted (null) model is better than the actual model to the total number of permutations.
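As a worked illustration of a permutation p-value, the sketch below rescores a model on label-permuted data and counts how often the null model does at least as well as the real one. The add-one correction, which keeps the p-value from ever being exactly zero, is one common way to "penalize" the ratio; it is an assumption here, not necessarily the exact formula inside OSC.validate.model. `score` is a hypothetical callback standing in for fitting and evaluating an O-PLS-DA model (for example, returning Q² or test-set accuracy, where higher is better).

```python
import random


def permutation_pvalue(actual_stat, null_stats, higher_is_better=True):
    """Add-one ("penalized") permutation p-value.

    Counts how often a model fit to permuted labels does at least as well as the
    real model; the +1 terms keep p from ever being exactly 0.
    """
    if higher_is_better:
        n_better = sum(s >= actual_stat for s in null_stats)
    else:
        n_better = sum(s <= actual_stat for s in null_stats)
    return (n_better + 1) / (len(null_stats) + 1)


def permutation_test(score, X, y, n_perm=199, seed=0):
    """Build the null distribution by rescoring with shuffled class labels."""
    rng = random.Random(seed)
    actual = score(X, y)
    null = []
    for _ in range(n_perm):
        y_perm = list(y)
        rng.shuffle(y_perm)  # breaks any real link between samples and classes
        null.append(score(X, y_perm))
    return actual, null, permutation_pvalue(actual, null)


# Worked example: one of four permuted models beats an actual Q2 of 0.8,
# so p = (1 + 1) / (4 + 1) = 0.4.
p = permutation_pvalue(0.8, [0.1, 0.85, 0.2, 0.3])
```

With 199 permutations the smallest attainable p-value is 1/200 = 0.005, so the number of permutations bounds how strong the evidence against the null can look.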

-D

April 9, 2015 at 12:53 am

Hi,

I'm trying to apply your demo to single-cell RNA-seq data for a multi-class classification problem, and I have some simple questions.

1. As the output of model testing, can we use $predicted.Y as scores to assign each cell to a group?

2. What is the difference between $loadings, $loading.weights and $coefficients?

3. And lastly, could you give me a reference to cite?

Thank you very very much in advance

best

Madeleine

July 11, 2016 at 10:45 am

Hi,

To answer your questions:

1. The $predicted.Y is for the training data; there is also an object returned for the test data, and the test-data predictions give a better estimate of model quality.

2. Loadings and loading weights are similar and refer to each variable's contribution to the sample scores of each latent variable (LV): the loading weights are used to compute the scores from the variables, while the loadings describe how the scores reconstruct the variables. Coefficients are the cumulative contribution of each variable across all latent variables (up to the referenced LV).

3. Reference:

Book: Chemometrics with R by Ron Wehrens (DOI 10.1007/978-3-642-17841-2); O-PLS is discussed in section “11.2 Orthogonal Signal Correction and OPLS”, pages 240-244.
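To illustrate the difference between loading weights, loadings, and coefficients from point 2, here is a minimal NumPy sketch of PLS1 (a single response) fitted with NIPALS. Loading weights W define how variables are combined into scores, loadings P describe how scores reconstruct X, and the coefficients B = W (P^T W)^-1 q accumulate the contributions of all fitted LVs. This is ordinary PLS rather than the O-PLS code from the tutorial, and the function names are hypothetical. The prediction helper also relates to point 1: applying the fitted coefficients to held-out samples is what gives an honest estimate of model quality.

```python
import numpy as np


def pls1_nipals(X, y, n_lv):
    """Minimal PLS1 (single response) via NIPALS, returning weights, loadings and coefficients."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    E, f = X - x_mean, y - y_mean
    W, P, q = [], [], []
    for _ in range(n_lv):
        w = E.T @ f
        w = w / np.linalg.norm(w)   # loading weight: how variables combine into the scores
        t = E @ w                   # sample scores for this LV
        p = E.T @ t / (t @ t)       # loading: how the scores reconstruct each variable
        c = (f @ t) / (t @ t)       # y-loading for this LV
        E = E - np.outer(t, p)      # deflate X
        f = f - c * t               # deflate y
        W.append(w)
        P.append(p)
        q.append(c)
    W, P, q = np.column_stack(W), np.column_stack(P), np.array(q)
    # Coefficients accumulated over all fitted LVs: B = W (P^T W)^-1 q.
    B = W @ np.linalg.solve(P.T @ W, q)
    return {"W": W, "P": P, "q": q, "B": B, "x_mean": x_mean, "y_mean": y_mean}


def pls1_predict(model, X_new):
    """Predict new (e.g. held-out test) samples using the training means and coefficients."""
    return (np.asarray(X_new, dtype=float) - model["x_mean"]) @ model["B"] + model["y_mean"]
```

With as many LVs as variables, B reduces to the ordinary least-squares solution; using fewer LVs is what gives PLS its robustness to collinearity.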

I would recommend that you also take a look at random forest models for multi-class classification. These have many favorable properties compared with OPLS (e.g., they can model nonlinear relationships and are robust to overfitting). I would specifically look at the caret R package implementation: http://caret.r-forge.r-project.org/

July 23, 2016 at 12:22 am